Sparse approximation conditions¶

We now remove the assumption that \(x\) is \(K\)-sparse in \(\Phi\). This is indeed true for all real life signals as they are not truly sparse.

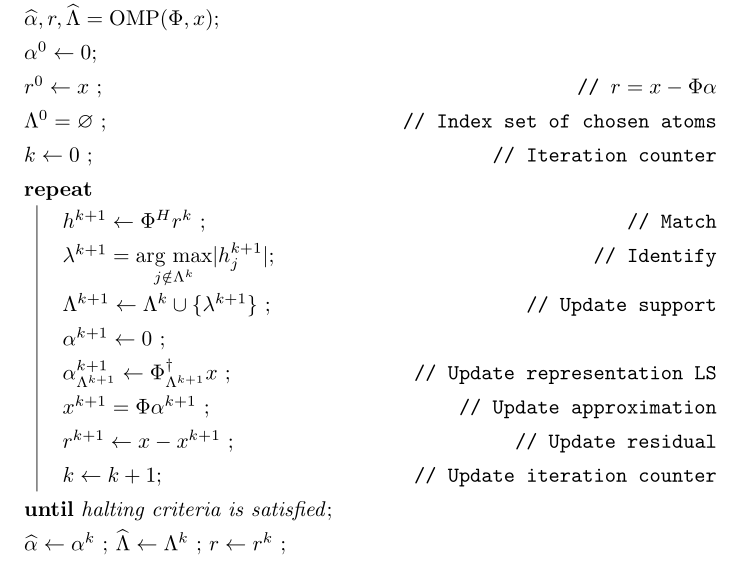

In this section we will look at conditions under which OMP can successfully solve the \((\mathcal{D}, K)\)-sparse approximation problem.

Let \(x\) be an arbitrary signal and suppose that \(\alpha_{\text{opt}}\) is an optimum \(K\)-term approximation representation of \(x\). i.e. \(\alpha_{\text{opt}}\) is a solution to (3) and the optimal \(K\)-term approximation of \(x\) is given by

We note that \(\alpha_{\text{opt}}\) may not be unique.

Let \(\Lambda_{\text{opt}}\) be the support of \(\alpha_{\text{opt}}\) which identifies the atoms in \(\Phi\) that participate in the \(K\)-term approximation of \(x\).

Once again let \(\Phi_{\text{opt}}\) be the sub-matrix consisting of columns of \(\Phi\) indexed by \(\Lambda_{\text{opt}}\).

We assume that columns in \(\Phi_{\text{opt}}\) are linearly independent. This is easily established since if any atom in this set were linearly dependent on other atoms, we could always find a more optimal solution.

Again let \(\Psi_{\text{opt}}\) be the matrix of \((D - K)\) columns which are not indexed by \(\Lambda_{\text{opt}}\).

We note that if \(\Lambda_{\text{opt}}\) is identified then finding \(\alpha_{\text{opt}}\) is a straightforward least squares problem.

We now present a condition under which Orthogonal Matching Pursuit is able to recover the optimal atoms.

Assume that \(\mu_1(K) < \frac{1}{2}\), and suppose that at \(k\)-th iteration, the support \(S^k\) for \(\alpha^k\) consists only of atoms from an optimal \(k\)-term approximation of the signal \(x\).At step \((k+1)\), Orthogonal Matching Pursuit will recover another atom indexed by \(\Lambda_{\text{opt}}\) whenever

A few remarks are in order.

- \(\| x - \Phi \alpha^k \|_2\) is the approximation error norm at \(k\)-th iteration.

- \(\| x - \Phi \alpha_{\text{opt}}\|_2\) is the optimum approximation error after \(K\) iterations.

- The theorem says that OMP makes absolute progress whenever the current error is larger than optimum error by a factor.

- As a result of this theorem, we note that every optimal \(K\)-term approximation of \(x\) contains the same kernel of atoms. The optimum error is always independent of choice of atoms in \(K\) term approximation (since it is optimum). Initial error is also independent of choice of atoms (since initial support is empty). OMP always selects the same set of atoms by design.

Let us assume that after \(k\) steps, OMP has recovered an approximation \(x^k\) given by

where \(S^k = \supp(\alpha^k)\) chooses \(k\) columns from \(\Phi\) all of which belong to \(\Phi_{\text{opt}}\).

Let the residual at \(k\)-th stage be

Recalling from previous section, a sufficient condition for recovering another optimal atom is

One difference from previous section is that \(r^k \notin \ColSpace(\Phi_{\text{opt}})\).

We can write

Note that \((x - x_{\text{opt}})\) is nothing but the residual left after \(K\) iterations.

We also note that since residual in OMP is always orthogonal to already selected columns, hence

We will now use these expressions to simplify \(\rho(r^k)\).

We now define two new terms

and

With these we have

Now \(x_{\text{opt}}\) has an exact \(K\)-term representation in \(\Phi\) given by \(\alpha_{\text{opt}}\). Hence \(\rho_{\text{opt}}(r^k)\) is nothing but \(\rho(r^k)\) for corresponding exact-sparse problem.

From the proof of here we recall

since

The remaining problem is \(\rho_{\text{err}}(r^k)\). Let us look at its numerator and denominator one by one.

\(\| \Psi_{\text{opt}}^H (x - x_{\text{opt}})\|_{\infty}\) essentially is the maximum (absolute) inner product between any column in \(\Psi_{\text{opt}}\) with \(x - x_{\text{opt}}\).

We can write

since all columns in \(\Phi\) are unit norm. In between we used Cauchy-Schwartz inequality.

Now look at denominator \(\| \Phi_{\text{opt}}^H (x_{\text{opt}} - x^k) \|_{\infty}\) where \((x_{\text{opt}} - x^k) \in \CC^N\) and \(\Phi_{\text{opt}} \in \CC^{N \times K}.\) Thus

Now for every \(v \in \CC^K\) we have

Hence

Since \(\Phi_{\text{opt}}\) has full column rank, hence its singular values are non-zero. Thus

From here we have

Combining these observations we get

Now from (2) \(\rho(r^k) <1\) whenever \(\rho_{\text{opt}}(r^k) + \rho_{\text{err}}(r^k) < 1\).

Thus a sufficient condition for \(\rho(r^k) <1\) can be written as

We need to simplify this expression a bit. Multiplying by \((1 - \mu_1(K))\) on both sides we get

We assumed \(\mu_1(K) < \frac{1}{2}\) thus \(1 - 2 \mu_1(K) > 0\) which validates the steps above.

Finally we remember that \((x - x_{\text{opt}}) \perp \ColSpace(\Phi_{\text{opt}})\) and \((x_{\text{opt}} - x^k) \in \ColSpace(\Phi_{\text{opt}})\) thus \((x - x_{\text{opt}})\) and \((x_{\text{opt}} - x^k)\) are orthogonal to each other. Thus by applying Pythagorean theorem we have

Thus we have

This gives us a sufficient condition

i.e. whenever (3) holds true, we have \(\rho(r^k) < 1\) which leads to OMP making a correct choice and choosing an atom from the optimal set.

Putting \(x^k = \Phi \alpha^k\) and \(x_{\text{opt}} = \Phi \alpha_{\text{opt}}\) we get back (1) which is the desired result.

This result establishes that as long as (1) holds for each of the steps from 1 to \(K\), OMP will recover a \(K\) term optimum approximation \(x_{\text{opt}}\). If \(x \in \CC^N\) is completely arbitrary, then it may not be possible that (1) holds for all the \(K\) iterations. In this situation, a question arises as to what is the worst \(K\)-term approximation error that OMP will incur if (1) doesn’t hold true all the way.

This is answered in following corollary of previous theorem.

Assume that \(\mu_1(K) < \frac{1}{2}\) and let \(x \in \CC^N\) be a completely arbitrary signal. Orthogonal Matching Pursuit produces a \(K\)-term approximation \(x^K\) which satisfies

where \(x_{\text{opt}}\) is the optimum \(K\)-term approximation of \(x\) in dictionary \(\DD\) (i.e. \(x_{\text{opt}} = \Phi \alpha_{\text{opt}}\) where \(\alpha_{\text{opt}}\) is an optimal solution of (3) ). \(C(\DD, K)\) is a constant depending upon the dictionary \(\DD\) and the desired sparsity level \(K\). An estimate of \(C(\DD, K)\) is given by

Suppose that OMP runs fine for first \(p\) steps where \(p < K\). Thus (1) keeps holding for first \(p\) steps. We now assume that (1) breaks at step \(p+1\) and OMP is no longer guaranteed to make an optimal choice of column from \(\Phi_{\text{opt}}\). Thus at step \(p+1\) we have

Any further iterations of OMP will only reduce the error further (although not in an optimal way). This gives us

Choosing

we can rewrite this as

This is a very useful result. It establishes that even if OMP is not able to recover the optimum \(K\)-term representation of \(x\), it always constructs an approximation whose error lies within a constant factor of optimum approximation error where the constant factor is given by \(\sqrt{1 + C(\DD, K)}\).

If the optimum approximation error \(\| x - x_{\text{opt}} \|_2\) is small then \(\| x - x^K \|_2\) will also be not too large.

If \(\| x - x_{\text{opt}} \|_2\) is moderate, then the OMP may inflate the approximation error to a higher value. But in this case, probably sparse approximation is not the right tool for signal representation over the dictionary.