Exact recovery conditions¶

Recall the \((\mathcal{D}, K)\)-exact-sparse problem discussed in Sparse approximation problem. OMP is a good and fast algorithm for solving this problem.

In terms of theoretical understanding, it is quite useful to know of certain conditions under which a sparse representation can be exactly recovered from a given signal using OMP. Such guarantees are known as exact recovery guarantees.

In this section, following Tropp in [Tro04], we will closely look at some conditions under which OMP is guaranteed to recover the solution for \((\mathcal{D}, K)\)-exact-sparse problem.

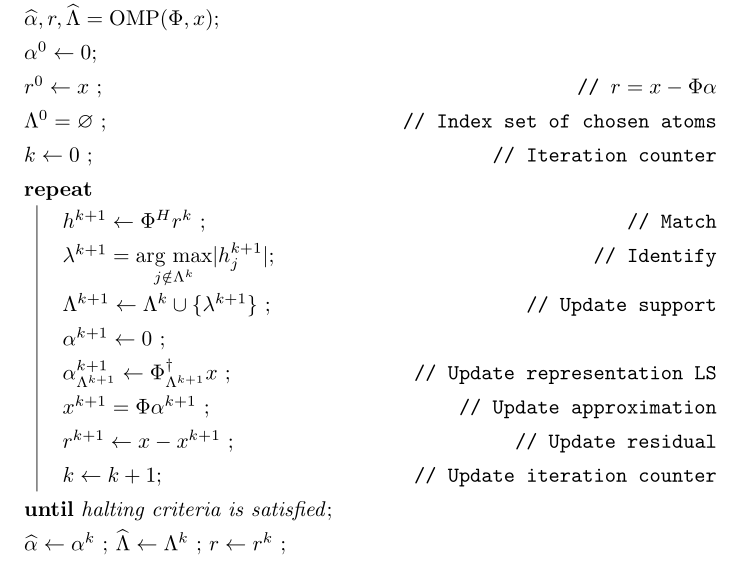

We rephrase the OMP algorithm following the conventions in \((\mathcal{D}, K)\)-exact-sparse problem.

It is known that \(x = \Phi \alpha\) where \(\alpha\) contains at most \(K\) non-zero entries. Both the support and entries of \(\alpha\) are known. OMP is only given \(\Phi\), \(x\) and \(K\) and is estimating \(\alpha\). The estimate returned by OMP is denoted as \(\widehat{\alpha}\).

Let \(\Lambda_{\text{opt}} = \supp(\alpha)\) be the set of indices at which optimal representation \(\alpha\) has non-zero entries. Then we can write

From the synthesis matrix \(\Phi\) we can extract a \(N \times K\) matrix \(\Phi_{\text{opt}}\) whose columns are indexed by \(\Lambda_{\text{opt}}\).

where \(\lambda_i \in \Lambda_{\text{opt}}\). Thus, we can also write

where \(\alpha_{\text{opt}} \in \CC^K\) is a vector of \(K\) complex entries.

Now the columns of optimum \(\Phi_{\text{opt}}\) are linearly independent. Hence \(\Phi_{\text{opt}}\) has full column rank.

We define another matrix \(\Psi_{\text{opt}}\) whose columns are the remaining \(D - K\) columns of \(\Phi\). Thus \(\Psi_{\text{opt}}\) consists of atoms or columns which do not participate in the optimum representation of \(x\).

OMP starts with an empty support. In every step, it picks up one column from \(\Phi\) and adds to the support of approximation. If we can ensure that it never selects any column from \(\Psi_{\text{opt}}\) we will be guaranteed that correct \(K\) sparse representation is recovered.

We will use mathematical induction and assume that OMP has succeeded in its first \(k\) steps and has chosen \(k\) columns from \(\Phi_{\text{opt}}\) so far. At this point it is left with the residual \(r^k\).

In \((k+1)\)-th iteration, we compute inner product of \(r^k\) with all columns in \(\Phi\) and choose the column which has highest inner product.

We note that maximum value of inner product of \(r^k\) with any of the columns in \(\Psi_{\text{opt}}\) is given by

Correspondingly, maximum value of inner product of \(r^k\) with any of the columns in \(\Phi_{\text{opt}}\) is given by

Actually since we have already shown that \(r^k\) is orthogonal to the columns already chosen, hence they will not contribute to this equation.

In order to make sure that none of the columns in \(\Psi_{\text{opt}}\) is selected, we need

We define a ratio

This ratio is known as greedy selection ratio.

We can see that as long as \(\rho(r^k) < 1\), OMP will make a right decision at \((k+1)\)-th stage. If \(\rho(r^k) = 1\) then there is no guarantee that OMP will make the right decision. We will assume pessimistically that OMP makes wrong decision in such situations.

We note that this definition of \(\rho(r^k)\) looks very similar to matrix \(p\)-norms defined in p-norm for matrices. It is suggested to review the properties of \(p\)-norms for matrices at this point.

We now present a condition which guarantees that \(\rho(r^k) < 1\) is always satisfied.

A sufficient condition for Orthogonal Matching Pursuit to resolve \(x\) completely in \(K\) steps is that

where \(\psi\) ranges over columns in \(\Psi_{\text{opt}}\).

Moreover, Orthogonal Matching Pursuit is a correct algorithm for \((\mathcal{D}, K)\)-exact-sparse problem whenever the condition holds for every superposition of \(K\) atoms from \(\DD\).

In (2) \(\Phi_{\text{opt}}^{\dag}\) is the pseudo-inverse of \(\Phi\)

What we need to show is if (2) holds true then \(\rho(r^k)\) will always be less than 1.

We note that the projection operator for the column span of \(\Phi_{\text{opt}}\) is given by

We also note that by assumption since \(x \in \ColSpace(\Phi_{\text{opt}})\) and the approximation at the \(k\)-th step, \(x^k = \Phi \alpha^k \in \ColSpace(\Phi_{\text{opt}})\), hence \(r^k = x - x^k\) also belongs to \(\ColSpace(\Phi_{\text{opt}})\).

Thus

i.e. applying the projection operator for \(\Phi_{\text{opt}}\) on \(r^k\) doesn’t change it.

Using this we can rewrite \(\rho(r^k)\) as

We see \(\Phi_{\text{opt}}^H r^k\) appearing both in numerator and denominator.

Now consider the matrix \(\Psi_{\text{opt}}^H (\Phi_{\text{opt}}^{\dag})^H\) and recall the definition of matrix \(\infty\)-norm from here

Thus

which gives us

Finally we recall that \(\| A\|_{\infty}\) is max row sum norm while \(\| A\|_1\) is max column sum norm. Hence

which means

Thus

Now the columns of \(\Phi_{\text{opt}}^{\dag} \Psi_{\text{opt}}\) are nothing but \(\Phi_{\text{opt}}^{\dag} \psi\) where \(\psi\) ranges over columns of \(\Psi_{\text{opt}}\).

Thus in terms of max column sum norm

Thus assuming that OMP has made \(k\) correct decision and \(r^k\) lies in \(\ColSpace( \Phi_{\text{opt}})\), \(\rho(r^k) < 1\) whenever

Finally the initial residual \(r^0 = 0\) which always lies in column space of \(\Phi_{\text{opt}}\). By above logic, OMP will always select an optimal column in each step. Since the residual is always orthogonal to the columns already selected, hence it will never select the same column twice. Thus in \(K\) steps it will retrieve all \(K\) atoms which comprise \(x\).

Babel function estimates¶

There is a small problem with this result. Since we don’t know the support a-priori hence its not possible to verify that

holds. Of course, verifying this for all \(K\) column sub-matrices is computationally prohibitive.

It turns out that Babel function (recall from Babel function) is there to help. We show how Babel function guarantees that exact recovery condition for OMP holds.

Suppose that \(\mu_1\) is the Babel function for a dictionary \(\DD\) with synthesis matrix \(\Phi\). The exact recovery condition holds whenever

Thus, Orthogonal Matching Pursuit is a correct algorithm for \((\mathcal{D}, K)\)-exact-sparse problem whenever (3) holds.

In other words, for sufficiently small \(K\) for which (3) holds, OMP will recover any arbitrary superposition of \(K\) atoms from \(\DD\).

We can write

We recall from here that operator-norm is subordinate i.e.

Thus with \(A = (\Phi_{\text{opt}}^H \Phi_{\text{opt}})^{-1}\) we have

With this we have

Now let us look at \(\| \Phi_{\text{opt}}^H \psi \|_1\) closely. There are \(K\) columns in \(\Phi_{\text{opt}}\). For each column we compute its inner product with \(\psi\). And then absolute sum of the inner product.

Also recall the definition of Babel function:

Clearly

We also need to provide a bound on \(\| (\Phi_{\text{opt}}^H \Phi_{\text{opt}})^{-1} \|_1\) which requires more work.

First note that since all columns in \(\Phi\) are unit norm, hence the diagonal of \(\Phi_{\text{opt}}^H \Phi_{\text{opt}}\) contains unit entries. Thus we can write

where \(A\) contains the off diagonal terms in \(\Phi_{\text{opt}}^H \Phi_{\text{opt}}\).

Looking carefully , each column of \(A\) lists the inner products between one atom of \(\Phi_{\text{opt}}\) and the remaining \(K-1\) atoms. By definition of Babel function

Now whenever \(\| A \|_1 < 1\) then the Von Neumann series \(\sum(-A)^k\) converges to the inverse \((I_K + A)^{-1}\).

Thus we have

Thus putting things together we get

Thus whenever

we have