Rademacher sensing matrices¶

In this section we collect several results related to Rademacher sensing matrices.

A Rademacher sensing matrix \(\Phi \in \RR^{M \times N}\) with \(M < N\) is constructed by drawing each entry \(\phi_{ij}\) independently from a Radamacher random distribution given by

Thus \(\phi_{ij}\) takes a value \(\pm \frac{1}{\sqrt{M}}\) with equal probability.

We can remove the scale factor \(\frac{1}{\sqrt{M}}\) out of the matrix \(\Phi\) writing

With that we can draw individual entries of \(\Chi\) from a simpler Rademacher distribution given by

Thus entries in \(\Chi\) take values of \(\pm 1\) with equal probability.

This construction is useful since it allows us to implement the multiplication with \(\Phi\) in terms of just additions and subtractions. The scaling can be implemented towards the end in the signal processing chain.

We note that

Actually we have a better result with

We can write

where \(\phi_j \in \RR^M\) is a Rademacher random vector with independent entries.

We note that

Actually in this case we also have

Thus the squared length of each of the columns in \(\Phi\) is \(1\) .

Let \(z \in \RR^M\) be a Rademacher random vector with i.i.d entries \(z_i\) that take a value \(\pm \frac{1}{\sqrt{M}}\) with equal probability. Let \(u \in \RR^M\) be an arbitrary unit norm vector. Then

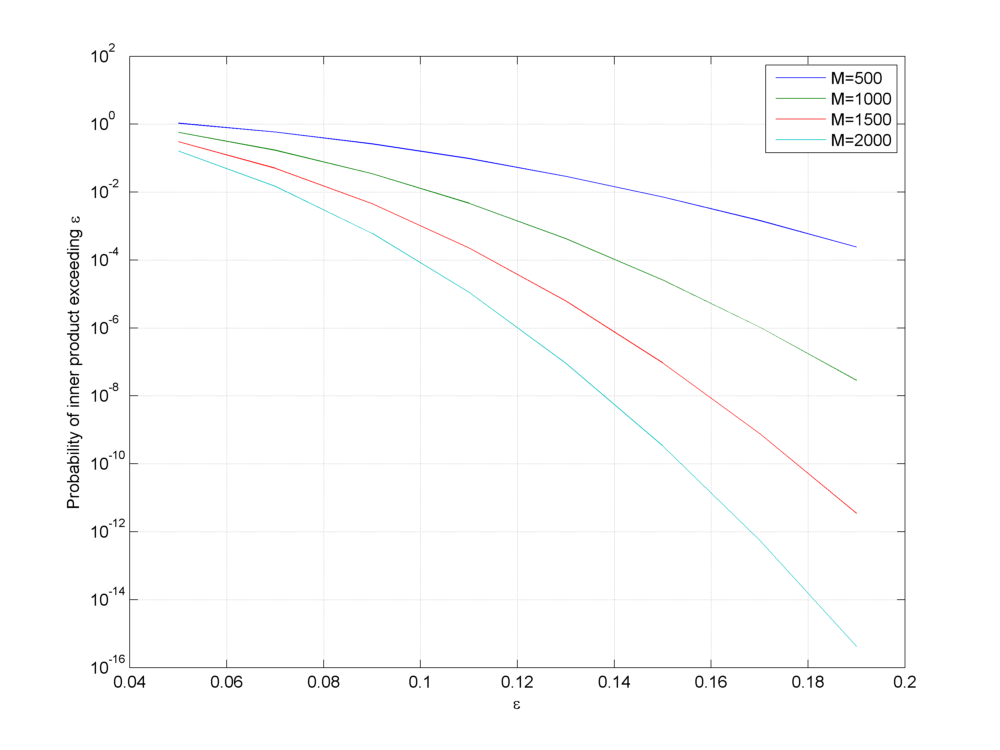

Representative values of this bound are plotted below.

Tail bound for the probability of inner product of a Rademacher random vector with a unit norm vector

A particular application of this lemma is when \(u\) itself is another (independently chosen) unit norm Rademacher random vector.

The lemma establishes that the probability of inner product of two independent unit norm Rademacher random vectors being large is very very small. In other words, independently chosen unit norm Rademacher random vectors are incoherent with high probability. This is a very useful result as we will see later in measurement of coherence of Rademacher sensing matrices.

Joint correlation¶

Columns of \(\Phi\) satisfy a joint correlation property ([TG07]) which is described in following lemma.

Let \(\{u_k\}\) be a sequence of \(K\) vectors (where \(u_k \in \RR^M\) ) whose \(l_2\) norms do not exceed one. Independently choose \(z \in \RR^M\) to be a random vector with i.i.d. entries \(z_i\) that take a value \(\pm \frac{1}{\sqrt{M}}\) with equal probability. Then

Let us call \(\gamma = \max_{k} | \langle z, u_k\rangle |\) .

We note that if for any \(u_k\) , \(\| u_k \|_2 <1\) and we increase the length of \(u_k\) by scaling it, then \(\gamma\) will not decrease and hence \(\PP(\gamma \leq \epsilon)\) will not increase. Thus if we prove the bound for vectors \(u_k\) with \(\| u_k\|_2 = 1 \Forall 1 \leq k \leq K\) , it will be applicable for all \(u_k\) whose \(l_2\) norms do not exceed one. Hence we will assume that \(\| u_k \|_2 = 1\) .

From previous lemma we have

Now the event

i.e. if any of the inner products (absolute value) is greater than \(\epsilon\) then the maximum is greater.

We recall Boole’s inequality which states that

Thus

This gives us

Coherence of Rademacher sensing matrix¶

We show that coherence of Rademacher sensing matrix is fairly small with high probability (adapted from [TG07]).

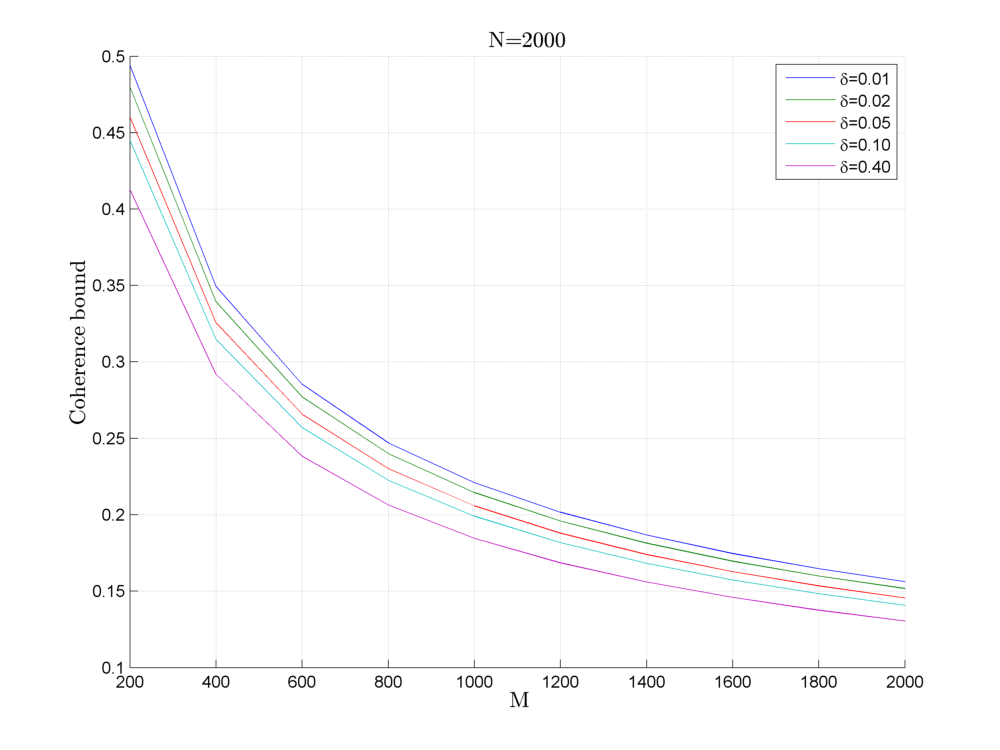

Fix \(\delta \in (0,1)\) . For an \(M \times N\) Rademacher sensing matrix \(\Phi\) as defined above, the coherence statistic

with probability exceeding \(1 - \delta\) .

Coherence bounds for Rademacher sensing matrices

We recall the definition of coherence as

Since \(\Phi\) is a Rademacher sensing matrix hence each column of \(\Phi\) is unit norm column. Consider some \(1 \leq j < k \leq N\) identifying columns \(\phi_j\) and \(\phi_k\) . We note that they are independent of each other. Thus from above we have

Now there are \(\frac{N(N-1)}{2}\) such pairs of \((j, k)\) . Hence by applying Boole’s inequality

Thus, we have

What we need to do now is to choose a suitable value of \(\epsilon\) so that the R.H.S. of this inequality is simplified.

We choose

This gives us

Putting back we get

This justifies why we need \(\delta \in (0,1)\) .

Finally

and

which completes the proof.