Soft Thresholding¶

Given a function \(f : \RR^n \to (-\infty, \infty]\), the proximal mapping of \(f\) is the operator given by

The proximal mapping in general is a point to set mapping. i.e. it maps each point \(x \in \RR^n\) to a set of points in \(\RR^n\) which minimize the expression on the R.H.S.

When \(f\) is a proper closed convex function, then the set \(\text{prox}_f(x)\) is a singleton for each \(x \in \RR^n\). In this case, proximal mapping can be treated as a single valued mapping or a function.

Let

To minimize \(g\) over \(u\), we differentiate \(g\) w.r.t. \(u\).

The minimum value is achieved at \(u = x\).

A simpler argument is that \(g\) is a constant plus a quadratic. Thus, the minimum of \(g\) is achieved when the quadratic is 0 which happens when \(x = u\).

Let

To minimize \(g\) over \(u\), we differentiate \(g\) w.r.t. \(u\).

The minimum value is achieved at \(u = x - 1\).

Let \(f\) be defined as:

Then \(\text{prox}_f(x)\) is \([x - \lambda]_+\).

This is one-sided soft-thresholding.

Note that \(f\) is differentiable for \(x > 0\). \(\text{prox}_f(x)\) is the minimizer of the function

where

\(g\) is a proper, convex and closed function. \(g\) is differentiable for \(u > 0\) with \(g'(u) = g_1'(u)\) for \(u > 0\). The only point where \(g\) is non-differentiable is \(u = 0\) in the domain of \(g\) which is \(u \geq 0\).

If \(g'(u) = 0\), then \(u\) is a minimizer of \(g\) (since it is a convex function).

\(g_1'(u) = 0\) iff \(u = x - \lambda\). If \(x > \lambda\), then \(g'(x-\lambda) = g_1'(x-\lambda) = 0\). Thus, \(\text{prox}_f(x) = x - \lambda\) for \(x > \lambda\).

Now if \(x \leq \lambda\), the \(g'(u)\) is never 0 wherever \(g\) is differentiable. Since a minimum of \(g\) exists, it must be attained at a point of non-differentiability. The only point of non-differentiability is \(u = 0\). Thus, \(\text{prox}_f(x) = 0\) for \(x \leq \lambda\).

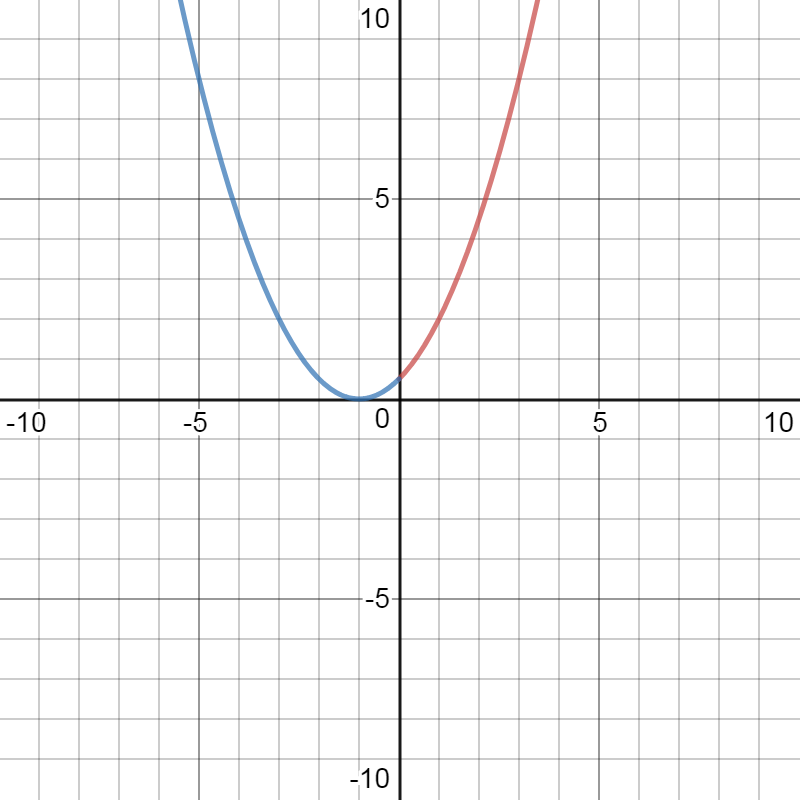

The quadratic \(g(u) = 2u + \frac{1}{2} (u - 1)^2\). If the \(2u\) term was not present, the minimum would have been \(u = 1\). The term \(2u\) just shifts the minimum left by 2 to \(u = -1\). However, if the domain of the function is restricted to \(u \geq 0\) (the red part of the graph), then the minimum is achieved at \(u = 0\).

Let \(f : \RR \to \RR\) be defined as \(f(x) = \lambda |x|\), then

Note that \(f\) is differentiable for \(x \neq 0\). \(\text{prox}_f(x)\) is the minimizer of the function

If a minimizer is obtained at \(u > 0\), then \(0 = f'(u) = g_1'(u) = \lambda + u - x\) giving us \(u = x - \lambda\). Since, we assumed that \(u > 0\), hence \(x > \lambda\). In other words, if \(x > \lambda\), then \(u = x - \lambda\) is the minimizer. Similarly, for \(x < -\lambda\), \(u = x + \lambda\) is the minimizer. For \(-\lambda \leq x \leq \lambda\), the minimizer is at the only point of non-differentiability for \(g_1\) which is \(u = 0\). Thus

This is nothing but shrinkage operator or soft thresholding operator.

The soft thresholding operator \(S: \RR \to \RR\) is defined as:

An equivalent definition is

The minimizer of

is \(S_{\lambda}(u)\).

This is a straight-forward application of the proximal operator result above.

The minimizer of

is \(S_{\lambda/\rho}(u)\).

\(f\) is differentiable everywhere except at \(x = 0\).

If a minimizer is obtained at \(x > 0\), then

giving us \(x = u - \frac{\lambda}{\rho}\). This holds true if \(u > \frac{\lambda}{\rho}\). Rest of the argument is similar.

Element-wise soft thresholding operator \(S_{\lambda} : \RR^n \to \RR^n\) is defined as

The minimizer of \(f: \RR^n \to \RR\) defined as

is \(S_{\lambda/\rho}(u)\) (applied element-wise).

The result follows from the fact that \(f(x)\) is separable as \(f(x) = \sum f_i(x_i)\) where

Element-wise soft thresholding operator over matrices \(S_{\lambda} : \RR^{m\times n} \to \RR^{m\times n}\) is defined as

The minimizer of \(f: \RR^{m \times n} \to \RR\) defined as

is \(S_{\lambda/\rho}(U)\) (applied element-wise).

See Row column norms for formal definition of \(\| \cdot \|_{1,1}\) norm. It is essentially the absolute sum of all elements of a matrix.